O, Syntax Tree

In conversations about language, trees feel like part of the landscape. Historical linguists have worked hard at reconstructing family trees for the world’s languages, with all their branching groups. Etymologists love to dig for the roots of words, and to trace the way they branch across time and space as well. Believe it or not, some experts are even trying to “translate” the language of trees, by decoding the way they communicate through the mycelial networks of the forest floor. This Language Matters instalment spotlights a particularly iconic example of trees in linguistics: the syntax trees used to model sentence formation.

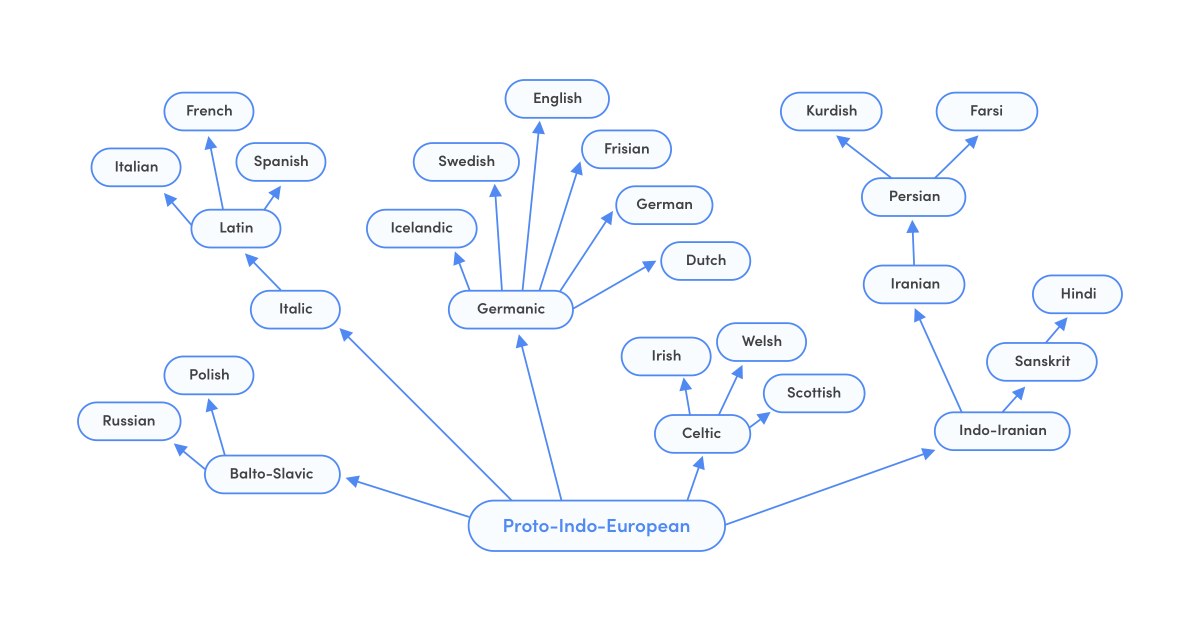

A family tree for Indo-European languages

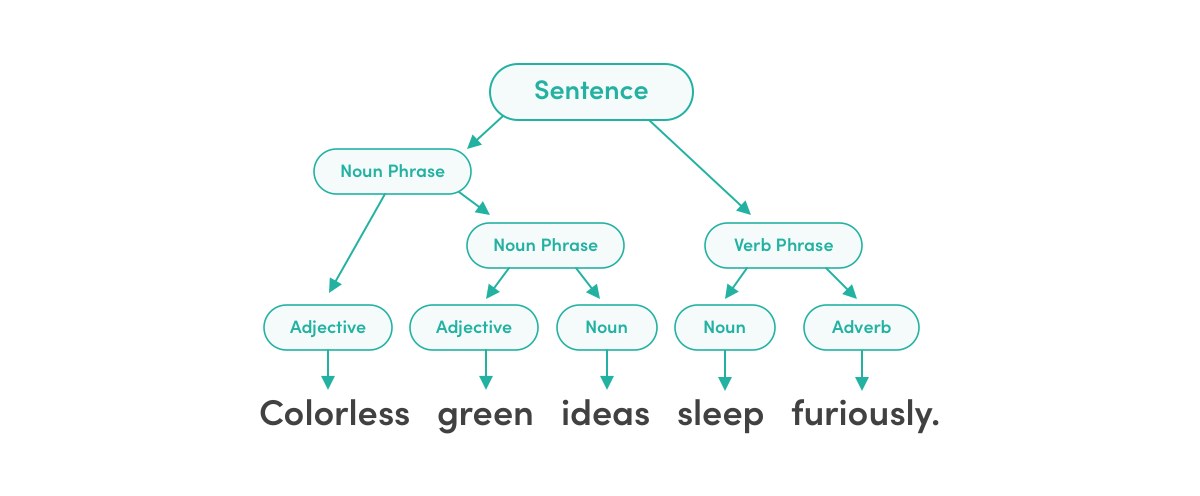

Syntax refers to the way a sentence’s constituent parts are ordered. Linguists borrowed the term from the ancient Greek grammarians who talked about suntaksis (“orderly arrangement”) in well-turned phrases, but the careful study of syntax is actually a recent development. Noam Chomsky jump-started the field with his book Syntactic Structures (1957), stressing that syntax has an implicit logic all its own that we automatically recognize and respect in arranging our words. We even understand this structure when meaning is stripped away: “Colourless green ideas sleep furiously” was Chomsky’s famous example of a sentence that makes perfect syntactic sense, without making much semantic sense. One way Chomsky tried to investigate the implicit logic of syntax involved visual maps that branch to show how constituent parts of our sentences form units and interact with each other. Our words don’t float around like lonely individual atoms, after all. They tend to form significant structures like “noun phrases” (the itsy bitsy spider) and “verb phrases” (climbed up the water spout), and these units go on to hang together and interact like molecules in ever larger and more complex superstructures.
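For readers who like to tinker, the idea of words grouping into nested units can be sketched in a few lines of Python. This is only an illustration: the tuple layout, the labels (S, NP, VP, PP and so on) and the helper names `words` and `phrases` are assumptions made for this example, and the analysis of the nursery-rhyme sentence is one simplified possibility among several.

```python
# A simplified, hypothetical constituency tree.
# Each node is a tuple: (label, child, child, ...); leaves are plain strings.
tree = (
    "S",
    ("NP", ("Det", "the"), ("Adj", "itsy"), ("Adj", "bitsy"), ("N", "spider")),
    ("VP",
        ("V", "climbed"),
        ("PP",
            ("P", "up"),
            ("NP", ("Det", "the"), ("N", "water"), ("N", "spout")))),
)


def words(node):
    """Return the words under a node, read left to right."""
    if isinstance(node, str):
        return [node]
    _label, *children = node
    return [w for child in children for w in words(child)]


def phrases(node, label):
    """Collect the text of every constituent that carries the given label."""
    if isinstance(node, str):
        return []
    found = [" ".join(words(node))] if node[0] == label else []
    for child in node[1:]:
        found.extend(phrases(child, label))
    return found


print(" ".join(words(tree)))
print(phrases(tree, "NP"))
print(phrases(tree, "VP"))
```

Asking for the `NP` units returns both the subject (the itsy bitsy spider) and the smaller noun phrase tucked inside the verb phrase (the water spout), which is the “units within units” idea in miniature.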

The “Chomskyan Revolution” got linguists all over the world drawing syntax trees or parse trees, diagramming the implicit logic of natural sentences in search of underlying order—and maybe even a secret master syntax at the heart of human language ability. Nobody has yet succeeded in discovering a “universal grammar” hardwired into the human brain, but the investigative tool of the parse tree really took root and bore fruit, so to speak, in fields like linguistics research and computerized language correction.

A syntax tree

Language Research

In academic linguistics research, the work of drawing and comparing syntax trees has driven insights like the recognition that English is a “head-first” language. English has prepositions, as opposed to the postpositions favoured by languages like Japanese: we talk about putting our sentences in order, as opposed to putting them order in. And unlike Japanese, English has a marked tendency to place a verb before the object of a sentence: Anglophones like citing examples, as opposed to examples citing. Tracing such patterns helps illuminate the way languages are shaped by “deep structures”: they’re not just random collections of arbitrary rules that speakers learn by rote in isolation, one by one. Such insights are of course theoretically interesting, but they can also come in handy for the practical business of “getting it right” in real-world usage. Tree diagrams allow us to visualize the way the constituents of language come together in natural units and interdependent patterns, which can help us see in concrete terms why some constructions “work” and others don’t. This is where language correction comes in.
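The head-first pattern can be mimicked with a toy Python function. This is a rough sketch rather than a model of real English or Japanese grammar: it assumes every phrase is a simple (head, complement) pair, and the name `linearize` and the `head_first` switch are invented for the illustration.

```python
def linearize(phrase, head_first=True):
    """Flatten a (head, complement) pair into a list of words.

    With head_first=True the head precedes its complement, as in English;
    with head_first=False the complement comes first at every level.
    """
    if isinstance(phrase, str):
        return [phrase]
    head, complement = phrase
    head_words = linearize(head, head_first)
    complement_words = linearize(complement, head_first)
    if head_first:
        return head_words + complement_words
    return complement_words + head_words


# Hypothetical toy phrases, each written as (head, complement).
prepositional = ("in", "order")
verbal = ("citing", "examples")

print(" ".join(linearize(prepositional)))                    # in order
print(" ".join(linearize(prepositional, head_first=False)))  # order in
print(" ".join(linearize(verbal)))                           # citing examples
print(" ".join(linearize(verbal, head_first=False)))         # examples citing
```

Flipping a single switch reorders every phrase in the same direction, which is the sense in which such patterns look like one underlying setting rather than a pile of unrelated rules.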

Language Correction

The more familiar you become with the way natural syntax organizes patterns within patterns, the easier it gets to express yourself in natural and grammatical ways. If you are a Japanese student of English as a second language, for example, and you recognize English as a strongly “head-first” language, right away you will be less tempted to string together a sentence like Sentence my orderly is, using Japanese syntax. You will be able to guess correctly how strange that might sound to native English speakers: just as strange as Japanese conversations would sound if you strung them together using standard English syntax. The advantage of language correction software is similarly built upon the recognition of deep, meaningful patterns within patterns.

A famous example of syntax divorced from semantics

Antidote’s team of computational linguists use state-of-the-art methods to make sense of written language, and thereby provide users with helpful advice on what works and what doesn’t in a given text. The generation and analysis of syntax trees is one powerful tool in this toolbox. Antidote’s users can even click on a parse tree icon at the top of the corrector window, to see for themselves how the parts of their sentences are syntactically interdependent.

For our purposes here, the image of interdependence is an evocative one, since the verb depend comes from the Latin dependere, meaning literally “to hang from”. If we picture syntax in terms of interdependence, then, we can imagine it less in terms of arbitrary rules to obey and more in terms of a living, branching system where things just naturally want to “hang together” as balanced supports and counterweights. Approaching sentences as collections of moveable branches can help in basic things like formulating English questions, for example: The best-case scenario is my primary concern, Janice. Where is the best-case scenario in this chart? Developing a sense for the arrangement of such units can also help with subtler questions of nuance and relative emphasis. It sounds much more affirmative and decisive, for example, to conclude a speech by saying This idea is very risky, but it’s our best option, as opposed to wrapping up by saying This idea is our best option, although it is very risky… People are far more likely to respond positively to the first way of expressing such a recommendation! From this point of view, syntax trees are a graceful and useful gift from language experts to language lovers. They shed light on the sweet spots where the elements of our language can be meaningfully and elegantly hung.